Automated Tests

Automated tests can be added to your Interactive Labs to verify that they work as expected. Each time the Lab is changed, the tests are re-run and the results are displayed in the Dashboard.

Styles of Testing

Interactive Labs can be tested using the following strategies, in descending order of preference:

- Instruction-guided tests, using annotations as needed

- Writing your own tests with Cypress commands for labs

- Writing tests with low-level Cypress commands

Instruction-guided tests require the least maintenance as a lab evolves and don’t require writing test code in JavaScript. Use them wherever possible.

Instruction-Guided Tests

Many Labs can be tested with one Cypress command, performAllLabActions, that inspects the lab instructions for actions a learner should take and then performs them. We call these tests “instruction-guided” because they use your lab instructions to infer how to complete the lab, without you having to write detailed test code. To add an instruction-guided test to your lab, add this code to .cypress/instruction_guided_spec.js:

it("performs all lab actions", () => {
  cy.performAllLabActions();
});

This works best when the lab instructions explicitly specify every step the learner must take to complete the lab. (For this reason, challenges are not yet supported.)

Actions

Instruction-guided tests can perform the following types of actions:

- Click executable code blocks

- Click copy-to-editor code blocks

- Verify links within the lab environment

Instruction-guided tests make some assumptions by default:

- A code block contains a shell command unless a language is specified on the code block

- A successful code block exits with a zero status, and a failing code block exits with a non-zero status

- Any links to web applications within the lab environment (on environments.katacoda.com) respond with HTTP 200 when they are working as expected and some other status if they are not

However, if your lab doesn’t conform to these expectations, you may still be able to annotate it so that instruction-guided tests can successfully test it.

Annotating Lab Instructions

To help an instruction-guided test navigate your lab, you may apply the following annotation patterns. (These annotations build on lab markdown extensions such as the {{execute}} UI shortcut macro.)

Expectedly Failing Commands

Usually, when a code block exits with non-zero status, an instruction-guided test will consider it a failure. But if you expect your command to fail on purpose, you can annotate the code block to say what exit status is expected:

<div data-test-exit-status="2">

```
ls non-existent-file
```{{execute}}

</div>

(GNU ls exits with status 2 when it cannot access a file named on the command line.)

This could be useful, for example, when employing Error Driven Development to illustrate that a feature or file is missing before creating it:

- Provide a failing code block with the data-test-exit-status annotation

- Show how to create the feature or file in the lab

- Provide another code block whose contents are the same as the first, but which will succeed at that point in the lab

Error Driven Development shows the learner how to check whether the feature or file is present.

To render Markdown inside HTML block tags, you must separate the fenced code from the div with blank lines, as shown in this example.
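The default rule and the data-test-exit-status annotation combine into a single comparison. The sketch below is a hypothetical JavaScript model of that rule, not the test runner's actual code; codeBlockPassed is an illustrative name, and the attribute value is passed as a string because that is how it would be read from HTML.

```javascript
// Hypothetical model of the pass/fail rule for a shell code block:
// exit status 0 is expected unless data-test-exit-status names another status.
function codeBlockPassed(exitStatus, dataTestExitStatus) {
  // The attribute value arrives as a string, as it would when read from HTML.
  const expected =
    dataTestExitStatus === undefined ? 0 : Number.parseInt(dataTestExitStatus, 10);
  return exitStatus === expected;
}
```

Under this model, an annotated block fails if it unexpectedly succeeds, which is what lets the Error Driven Development pattern catch a file that already exists too early in the lab.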

Long-running Commands

Some shell commands will never terminate for the duration of the lab or until the user interrupts them. Examples include top, watch, or development servers running in the foreground.

By default, an instruction-guided test will wait for these commands to exit, and it will fail the test if they do not exit. To tell it not to wait, add the test-no-wait annotation to the execute macro:

```
top
```{{execute test-no-wait}}

To stop the long-running shell command, use the interrupt modifier on the next code block. Alternatively, start the long-running command in a second terminal by adding the T2 modifier, and leave it running.

When using test-no-wait, validate the command’s output to ensure it started successfully: surround the code block with a div tag and add a data-test-output attribute that specifies the output to look for:

<div data-test-output="Listening on http://0.0.0.0:3000">

```
rails server
```{{execute test-no-wait}}

</div>

To render Markdown inside HTML block tags, you must separate the fenced code from the div with blank lines, as shown in this example.

After starting the rails server command, the test will wait for the output Listening on http://0.0.0.0:3000 and fail if it does not appear. (Currently it looks for the output anywhere in the terminal, not just since the command started, but that may change in the future.) It’s not necessary to include the entire command output in data-test-output, but do specify output that indicates success and that the long-running command is ready for the lab test to continue. Once the test finds this output, it will continue with the next action from the lab instructions.

The test-no-wait annotation and the data-test-output attribute must currently be used together: any code block that uses one must also use the other. This may change in the future.

This feature is not intended for commands that merely take a long time to complete; it is for commands that run more or less “forever.”
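The waiting behavior of data-test-output can be modeled as a poll-with-timeout loop. The sketch below is hypothetical: readTerminal stands in for however the test reads the terminal, and the default timeout and polling interval are arbitrary values, not the test runner's settings. Like the current behavior described above, it searches the whole terminal rather than only the output printed since the command started.

```javascript
// Poll a terminal snapshot until it contains the expected text, or time out.
// readTerminal is a hypothetical callback returning the full terminal text.
function waitForOutput(readTerminal, expected, { timeoutMs = 60000, intervalMs = 500 } = {}) {
  const deadline = Date.now() + timeoutMs;
  return new Promise((resolve, reject) => {
    const check = () => {
      // Search the entire terminal, not just output since the command started.
      if (readTerminal().includes(expected)) return resolve(true);
      if (Date.now() >= deadline) {
        return reject(new Error(`Timed out waiting for "${expected}"`));
      }
      setTimeout(check, intervalMs);
    };
    check();
  });
}
```

Because the search covers the whole terminal, a string that already appeared earlier in the session would satisfy the wait immediately, which is one more reason to choose a data-test-output value that only the ready state prints.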

The watch command can be used with this annotation pattern and may be especially helpful in Kubernetes labs, where kubectl returns before the configuration change has taken effect (pods replaced, etc.). This example waits for the apiserver to be ready, as indicated by “1/1” in the Ready column:

Run this command until the apiserver is ready:

<div data-test-output=" 1/1 ">

```
watch kubectl -n kube-system get pod kube-apiserver-controlplane
```{{execute test-no-wait}}

</div>

When you see "1/1" in the Ready column, press <kbd>Ctrl</kbd>+<kbd>C</kbd> or click `clear`{{execute interrupt}}.

It’s important to tell both the learner and the instruction-guided test when to break out of the watch command: this example tells the test with data-test-output and tells the learner in prose. The clear command’s interrupt modifier lets both the test and the learner easily break out of the watch command. (Take care to specify a data-test-output string that will not accidentally match some other part of the watch output.)

Commands in a REPL

By default, the performAllLabActions Cypress command will assume that code blocks contain shell commands. But if a language is specified on the code block, the performAllLabActions command will interpret the output of the code block’s execution differently.

```ruby
puts "Hello world!"
```

These languages are currently supported:

| Language | Prompt | Errors |
|---|---|---|
| Bash | See below | |
| Ruby 3 (irb) | “3.2.2 :001 > ” (/^3.\d+.\d+ :\d{3,} > $/) | [/error/i, /^Traceback (most recent call last)/] |
| Python | “>>> ” (/^>>> $/) | [/Traceback (most recent call last)/] |
| SQL | “mysql> ” or “postgres=> ” (/^[_=a-z0-9]*> $/i) | [/^ERROR \d+ \(/, /^ERROR: /, /^psql: error: /] |
| ↳ PostgreSQL (psql) | “some_database=> ” (/^[_a-z0-9]+=> $/i) | [/^ERROR: /, /^psql: error: /] |
| ↳ MySQL | “mysql> ” (/^mysql> $/i) | [/^ERROR \d+ \(/] |

The prompt and error patterns are used to determine when a code block finishes executing and whether it was successful.

Note that using the sql language may enable better syntax highlighting, but using mysql or psql will apply more specific prompt and error patterns. Start with sql, and use the more specific patterns only if necessary.
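To make the table concrete, here is a hypothetical JavaScript sketch of how prompt and error patterns could be applied to terminal output. It reuses the PostgreSQL and MySQL patterns from the table; the classifyOutput function, the rule that a block is finished once the last line matches the prompt, and the assumption of plain "\n" line endings are illustrative choices, not the performAllLabActions implementation.

```javascript
// Patterns copied from the PostgreSQL and MySQL rows of the table above.
const LANGUAGES = {
  psql: { prompt: /^[_a-z0-9]+=> $/i, errors: [/^ERROR: /, /^psql: error: /] },
  mysql: { prompt: /^mysql> $/i, errors: [/^ERROR \d+ \(/] },
};

// Classify output printed since a code block was sent to the REPL.
// Returns "running" until the last line is a prompt, then "success" or "error".
function classifyOutput(language, output) {
  const { prompt, errors } = LANGUAGES[language];
  const lines = output.split("\n");
  const lastLine = lines[lines.length - 1];
  if (!prompt.test(lastLine)) return "running";
  // Any line before the prompt that matches an error pattern marks a failure.
  const body = lines.slice(0, -1);
  const failed = body.some(line => errors.some(re => re.test(line)));
  return failed ? "error" : "success";
}
```

With these patterns, classifyOutput("psql", "CREATE TABLE\nshop=> ") returns "success", while output with a line starting "ERROR: " before the prompt returns "error".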

Bash is not on the list because it is too difficult to detect errors from output alone. For code blocks with a language of bash or no language, a shell-specific algorithm is used instead: it appends a command that prints the exit status of the last command in each code block.
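The exit-status approach can be sketched in a few lines. The exact command the test runner appends is not documented here, so this JavaScript sketch assumes a hypothetical "exit status=" marker printed with echo; wrapBashBlock and parseExitStatus are illustrative names. Because the typed echo command also contains the marker, the parser reads the last occurrence and requires digits after it.

```javascript
// Hypothetical marker; the real algorithm's marker text may differ.
const MARKER = "exit status=";

// Append a command that prints the exit status ($?) of the block's last command.
function wrapBashBlock(code) {
  return `${code.trimEnd()}\necho "${MARKER}$?"`;
}

// Read the exit status printed after the last occurrence of the marker.
// Returns null if the status has not been printed yet.
function parseExitStatus(terminalText) {
  const idx = terminalText.lastIndexOf(MARKER);
  if (idx === -1) return null;
  // The echoed command line itself shows "$?" here, which is not a number.
  const match = /^\d+/.exec(terminalText.slice(idx + MARKER.length));
  return match ? Number(match[0]) : null;
}
```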

When transitioning into or out of a REPL, the code block’s language may be different from the prompt that is left for the next code block. For example, when running the interactive python command, the code block is written in bash syntax, but the resulting prompt will be Python’s. Annotate these transitions with a data-switch-to attribute:

<div data-switch-to="python">

```bash
python
```{{execute}}

</div>
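One way to picture data-switch-to is as a record of which prompt each code block leaves behind. The sketch below is a hypothetical JavaScript model, not the test runner's code; the block objects, their field names, and the use of data-switch-to="bash" when leaving a REPL are assumptions made for illustration.

```javascript
// Hypothetical model: a block's data-switch-to value (switchTo) wins;
// otherwise the prompt stays in the block's own language, bash by default.
function promptAfter(block) {
  return block.switchTo ?? block.language ?? "bash";
}

// Report which prompt the test should wait for after each code block.
function expectedPrompts(blocks) {
  return blocks.map(block => ({ code: block.code, waitFor: promptAfter(block) }));
}
```

For a lab that runs python, then print("hi") in the Python REPL, then exit() annotated with data-switch-to="bash", and finally ls, this model waits for the python, python, bash, and bash prompts in turn.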

Links

By default, an instruction-guided test will assume that links to the lab environment should respond with an HTTP 200 status. To validate them instead by checking for a string in the HTTP response body, add a data-test-contains attribute to the link:

<a href="https://[[HOST_SUBDOMAIN]]-3000-[[KATACODA_HOST]].environments.katacoda.com"
   data-test-contains="Ruby on Rails 7.1">check for Ruby on Rails</a>

If you are currently using Markdown syntax for the link, you’ll need to convert it to an HTML-style link, for example:

[text](url)

would become:

<a href="url" data-test-contains="string">text</a>
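The link rules above reduce to a small decision function. This is a hypothetical JavaScript sketch, not the test runner's code: linkPasses and checkLink are illustrative names, and checkLink assumes a runtime with a global fetch(), such as Node 18 or later.

```javascript
// Default: the link must return HTTP 200. With data-test-contains,
// the response body must instead contain the given string.
function linkPasses(response, testContains) {
  if (testContains !== undefined) {
    return response.body.includes(testContains);
  }
  return response.status === 200;
}

// Fetch a lab link and apply linkPasses (requires a global fetch()).
async function checkLink(url, testContains) {
  const res = await fetch(url);
  const body = await res.text();
  return linkPasses({ status: res.status, body }, testContains);
}
```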

Writing your own tests

If you are not able to test your lab successfully using instruction-guided tests (above), even after adding annotations, you may still be able to test your lab with some high-level Cypress commands that are designed specifically for interacting with labs.

The performAllLabActions Cypress command works by internally calling many of these same commands.

Cypress

Cypress.io is a complete end-to-end testing tool that can be used to verify that your interactive labs are working as expected.

You can learn more about Cypress at https://docs.cypress.io/guides/overview/why-cypress.html.

Cypress version 13.3.0 is currently supported.

Cypress Commands for Labs

- startLab() - Start the lab by visiting it in the Cypress headless browser

- watchForVsCodeLoaded() - For labs that use the vscode editor layout, start watching for vscode to load in the background so that “Copy to Editor” blocks are not run before vscode is ready

- clickStepActions(title) - Perform actions for the current step only, and verify its title

- clickCurrentStepActions() - Perform all the actions on the current step

- clickCodeBlockContaining(uniqueSubstring) - Find a code block containing uniqueSubstring and then call clickCodeBlock with it

- clickCodeBlock($codeBlock) - Click the given code block to run its command and then validate that the command returns a zero status or behaves as annotated

- clickCopyToEditorContaining(uniqueSubstring) - Find a “Copy to Editor” block containing uniqueSubstring and then call clickCopyToEditor with it

- clickCopyToEditor($codeBlock) - Click the “Copy to Editor” button for the given code block and then validate that the target file contains the copied code

- followLink($link) - Fetch the URL specified by the href attribute of the given HTML anchor (a) element and validate that the response has HTTP 200 status or behaves as annotated

Example detailed test

Here’s an example detailed test using some of the above Cypress commands:

describe("My Test", () => {
  it("Starts development server", () => {
    cy.startLab();
    cy.watchForVsCodeLoaded(); // Don't await this
    cy.clickStepActions("Install Dependencies");
    cy.clickCodeBlockContaining("npm start");
    cy.get("a")
      .contains("the link text")
      .then($link => cy.followLink($link));
  });
});

(Such a test should be necessary only if an instruction-guided test cannot test your lab.)

Writing your own tests vs. instruction-guided tests

Compared with instruction-guided tests, tests that you write will need to be updated more frequently as you change the lab instructions. For example, if you add a code block to the instructions, your test will not run that code block until you update it to run the code block.

To reduce the maintenance effort your tests require, we encourage you to refer to code blocks by a unique substring that will not need to be updated every time you refine your instructions. For example, if you ask the user to run apt-get install -y curl in the lab instructions, you may run that code block with the following test code:

cy.clickCodeBlockContaining("apt-get install");

Omitting the -y option and curl package allows you to adjust the option and package list in the instructions without having to also change your test in lock step.
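The sketch below illustrates why a unique substring should be specific enough to match exactly one code block but general enough to survive edits. findCodeBlockContaining is a hypothetical stand-in for clickCodeBlockContaining, and its rule of throwing on zero or multiple matches is an assumption of this sketch, not documented behavior of the real command.

```javascript
// Return the single code block whose text contains uniqueSubstring.
// Throwing on zero or multiple matches is this sketch's own choice.
function findCodeBlockContaining(codeBlocks, uniqueSubstring) {
  const matches = codeBlocks.filter(text => text.includes(uniqueSubstring));
  if (matches.length !== 1) {
    throw new Error(
      `Expected 1 code block containing "${uniqueSubstring}", found ${matches.length}`
    );
  }
  return matches[0];
}
```

With the blocks "apt-get update", "apt-get install -y curl", and "curl --version", the substring "apt-get install" selects exactly one block, while "apt-get" or "curl" alone would each match two.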

Low-level Cypress Commands

Cypress provides a number of built-in commands that can interact with Cypress’s headless browser.

In addition, the following custom Cypress commands allow you to interact with your lab during the test. This may be useful if your instructions rely on the user to perform actions in a web application in another tab, such as in Google Cloud Console or a Kubernetes Dashboard. You will then need your test to replicate the effect of those actions, for example by running equivalent commands within the lab terminal.

The more detail you encode into your test, the more careful you will need to be to keep it up to date with your instructions. For example, if you remove a step from your instructions because you mistakenly thought it was unnecessary, but forget to remove it from your test, your tests will continue to pass despite the problem in the instructions. To avoid that, consider verifying the prose instructions you are replicating, so that if they change, a test failure will alert you to update your test code. For example:

cy.contains("Enter this value into the cloud console for instance XYZ");
cy.terminalType(`cloud instance add --name XYZ --value ${thisValue}`);

| Function | Details | Example |
|---|---|---|
| startLab | Start and visit the Lab being tested in the browser | cy.startLab(); |
| terminalType | Type and execute commands within the Terminal | cy.terminalType("uname"); |
| terminalShouldContain | Wait for the terminal to contain the given text | cy.terminalShouldContain('Linux'); |
| terminalShouldNotContain | Wait for the given text to be removed from the terminal. Replaces terminalNotShouldContain. | cy.terminalShouldNotContain('some text'); |
| terminalDoesNotContain | Assert that the given text is currently absent from the terminal, without waiting as terminalShouldNotContain does | cy.terminalDoesNotContain('some text'); |
| contains | Wait for the Lab page to contain the given text | cy.contains('Start Scenario'); |
| terminalShouldHavePath | Wait for the path to be accessible from the terminal | cy.terminalShouldHavePath("/tmp/build-complete.stamp"); |
| terminalValueAfter | Return the first space-delimited string after the last occurrence of the given text | cy.terminalValueAfter("exit status="); |
| stepHasText | Wait for the Lab instructions to contain the given text | cy.stepHasText("Step 1: ..."); |

These custom Cypress commands can be combined with the built-in Cypress commands, documented on docs.cypress.io.

Creating a Lab’s First Test

- Within the lab you want to test, add a folder called .cypress.

- Within the .cypress directory, create a file named instruction_guided_spec.js to contain the test.

- Add the following code to instruction_guided_spec.js:

  it("performs all lab actions", () => {
    cy.performAllLabActions();
  });

- Add and commit the test to Git, then push to the remote Git repository to update the lab and trigger a test run:

  git add .cypress/instruction_guided_spec.js
  git commit -m 'Add my first instruction-guided test' .cypress/instruction_guided_spec.js
  git push

- Visit the Dashboard to view the results.

- Add annotations to the instructions to help the test navigate them.

If you create additional tests later, you may add them within .cypress in one or more separate files ending in _spec.js, such as custom_spec.js. Subdirectories are not supported.

A complete example can be found at https://github.com/katacoda/scenario-examples/tree/main/instruction-guided-template.

More examples of using Cypress can be found at https://docs.cypress.io/examples/examples/recipes.html#Fundamentals.

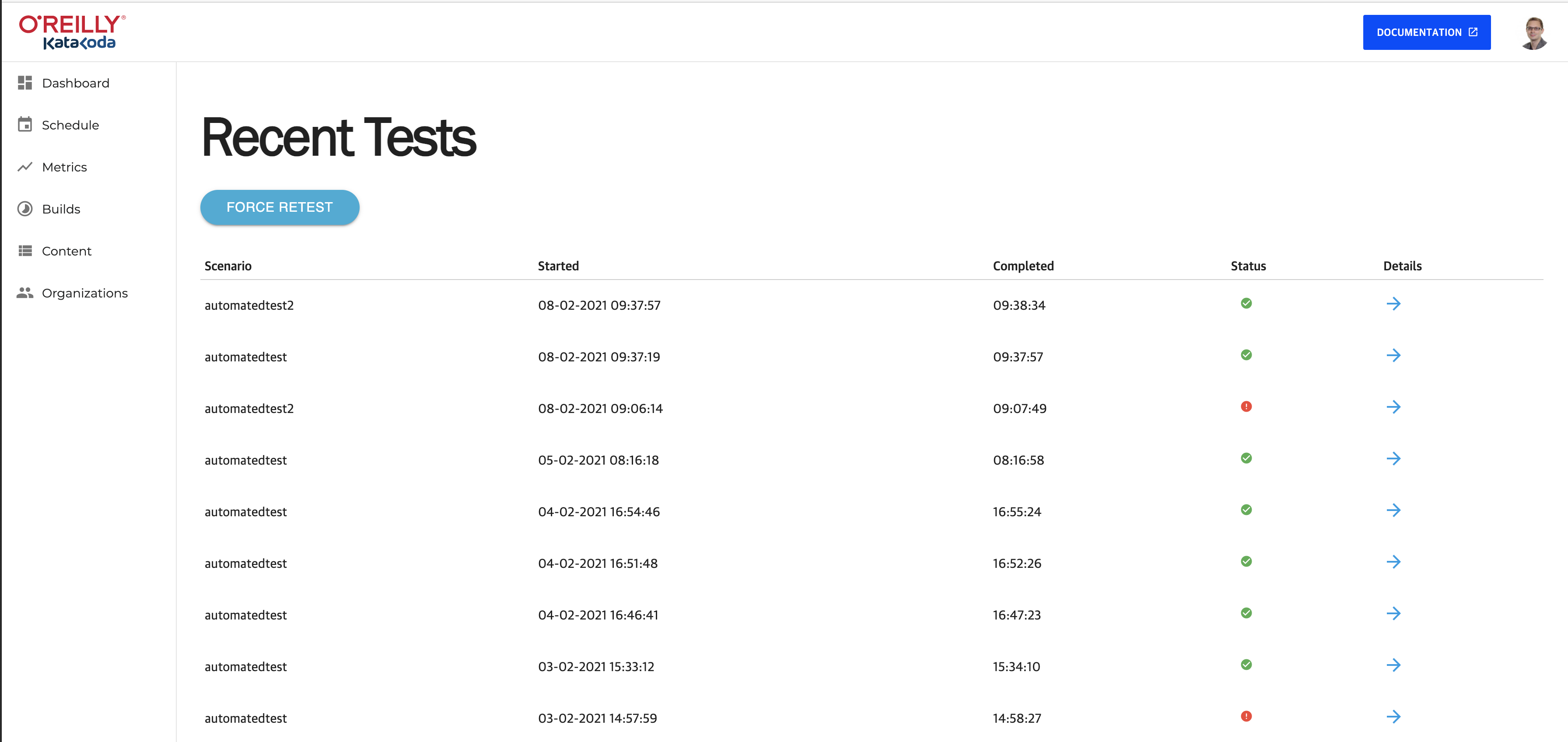

View Results

The results of the test execution can be viewed in the Dashboard. You can find test runs for your organization at https://dashboard.katacoda.com/content/testruns. A test run will be triggered for any lab that changes when you push to the lab’s repository. You can also re-run all of your tests at once by clicking the “Force Retest” button.

If an instruction-guided test fails, look at the log messages to assess whether the problem can be resolved by adding an annotation. A failure may be caused by an issue with the lab instructions or an issue with the test itself. Look for screenshots of the failure on the test result page, and refer to the logs from the test run.

Presentation Video

This video explains what instruction-guided testing is and how to use it. (10 minutes)

Demo Video

This shows how to add instruction-guided testing to a new lab and an example usage of annotations. (25 minutes)